NEWS

2021.11.29

Toyota develops a smaller, lighter AI technology for Human Pose Estimation; paper accepted at IEEE FG2021, a top conference in human activity analysis

- High accuracy with low power consumption and hardware portability for embedded Human Pose Estimation in computer vision research -

●Latest related news

●Hiring (the website is in Japanese)

In Tokyo: Data analysis and Recognition/Identification architecture in Embedded Vehicle/Cloud system

Toyota Motor Corporation

Data Analysis Group

InfoTech, Connected Advanced Development Div., Connected Company

Masayuki Yamazaki (Project Manager, R&D senior engineer)

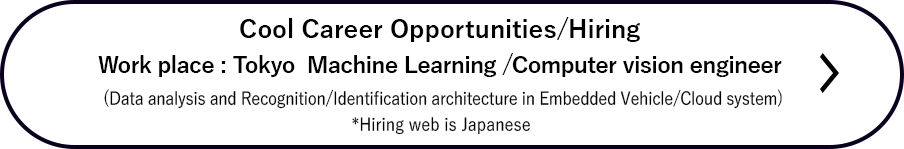

Toyota Motor Corporation has developed an image recognition AI technology for Human Pose Estimation. Our paper was accepted by IEEE FG2021 (IEEE International Conference on Automatic Face and Gesture Recognition 2021), the most authoritative conference in the field of human activity analysis and artificial intelligence, and will be presented at the conference, held on December 15-18, 2021. On the Microsoft COCO person keypoints dataset, a well-known open dataset, this technology achieves the highest inference accuracy for the least amount of computation among lightweight Human Pose Estimation methods.

Human Pose "Measurement" technology, also known as motion capture, has been used in various industries to generate data on the position and movement of human body parts by attaching measuring instruments to the body. However, using measuring instruments raises several issues: delicate mounting and calibration, preparation time, and the physical strain of wearing them.

In recent years, with advances in machine learning, motion capture based on image recognition AI, which needs none of the measuring instruments above, has become mainstream. It is now called Human Pose "Estimation" technology.

It has made it easier to convert human movements into data immediately, and has enabled simultaneous skeletal and behavioral estimation for multiple people. Since the emergence of neural networks, Human Pose Estimation has attracted the attention of research institutes and companies, and a global development race is under way.

This technology is one of the fundamental technologies of human activity analysis, and its many applications are now widely used in society.

- Scene understanding for ADAS, the vehicle cabin, etc.

- Video analysis, behavior recognition, prediction and forecasting, and anomaly detection for surveillance cameras, video streaming, and video camera systems

- Robotics, human communication, human interaction

- Video applications with AR / VR / XR, etc.

- Sports pose analysis, estimation of physical workload in factories and nursing care, rehabilitation support, etc.

The presented technology significantly reduces the number of neural network operations, with a view to embedded implementation on low-power devices, highly efficient parallel operation in data centers, and hardware portability with reduced device dependency. To avoid depending on any particular hardware vendor, we used only general neural network operations.

Such measures are generally accompanied by a significant drop in accuracy, but we achieved a good balance among light weight, generality, and inference accuracy by rethinking the neural network configuration and devising the machine learning algorithm.

In addition, after this paper was accepted in Round 1 of FG2021, the author was selected as a paper reviewer for Round 2 and has been cooperating with FG2021. Since 2017 we have been developing knowledge and techniques for AI development and lightweight/embedded development together with Sigfoss Corporation, our co-author. Through our research and development with start-ups and tech companies, we continue to contribute to the safety and convenience of the mobility society, the realization of a decarbonized society, and the development of a sustainable society.

– Key technologies –

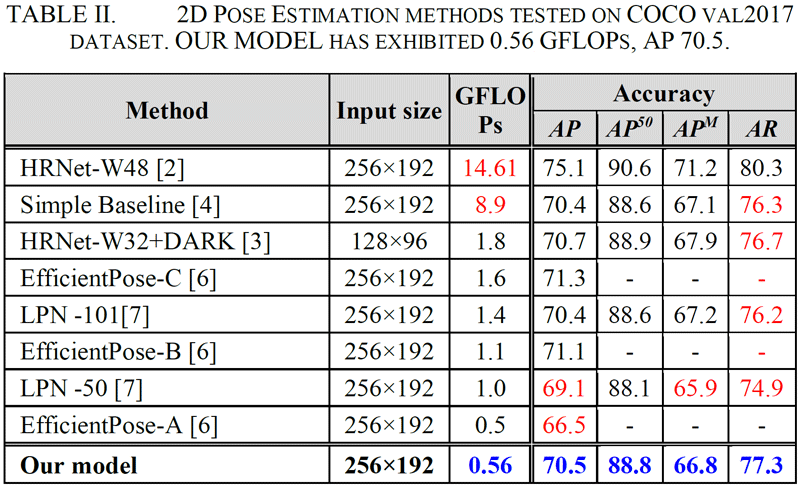

We have succeeded in making the neural network smaller and lighter by adding a coordinate interpolation step as post-processing of the neural network output. 2D Human Pose Estimation is a task that treats an input image as a two-dimensional plane and estimates the two-dimensional coordinates (x, y) of the skeletal points of the people in the image.

Many conventional methods compute skeletal point coordinates directly at high resolution using neural networks, which is computationally expensive. In the presented technology, skeletal point coordinates are first computed at coarse resolution using a small, lightweight, and simple neural network, and then refined to high accuracy with a relatively simple coordinate interpolation method based on Taylor expansion.

Also, many conventional methods require expensive, highly flexible hardware such as GPUs, because they use special operations and large neural networks. The presented technology uses only general neural network operations such as basic convolutions. It can therefore be embedded in a wide range of hardware, including low-power and inexpensive devices. The accepted paper includes examples of embedded implementation and measurement on NVIDIA Jetson AGX and Xilinx FPGA.
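The coarse-to-fine step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation from the paper: it assumes the network outputs one coarse heatmap per skeletal point, that the peak is roughly Gaussian (so its logarithm is close to quadratic), and that the refinement is a second-order Taylor expansion around the integer peak. The function name `refine_peak` and the `stride` parameter are hypothetical.

```python
import math


def refine_peak(heatmap, stride=1.0):
    """Estimate a sub-pixel peak (x, y) from a coarse 2D heatmap.

    Assumed setup (not taken from the paper): `heatmap` is a list of rows
    of positive scores, and `stride` scales heatmap cells to input pixels.
    """
    h, w = len(heatmap), len(heatmap[0])
    # Integer-resolution peak: the cell with the highest score.
    py, px = max(
        ((y, x) for y in range(h) for x in range(w)),
        key=lambda p: heatmap[p[0]][p[1]],
    )
    # On the border there are no neighbours to differentiate with.
    if not (0 < px < w - 1 and 0 < py < h - 1):
        return px * stride, py * stride

    def f(y, x):
        # Log-score; the clamp avoids log(0).
        return math.log(max(heatmap[y][x], 1e-10))

    # Finite-difference gradient and Hessian of the log-heatmap at the peak.
    dx = 0.5 * (f(py, px + 1) - f(py, px - 1))
    dy = 0.5 * (f(py + 1, px) - f(py - 1, px))
    dxx = f(py, px + 1) - 2.0 * f(py, px) + f(py, px - 1)
    dyy = f(py + 1, px) - 2.0 * f(py, px) + f(py - 1, px)
    dxy = 0.25 * (f(py + 1, px + 1) - f(py + 1, px - 1)
                  - f(py - 1, px + 1) + f(py - 1, px - 1))

    det = dxx * dyy - dxy * dxy
    if abs(det) < 1e-12:
        return px * stride, py * stride  # Hessian not invertible: keep the coarse peak.

    # Zero gradient of the Taylor expansion gives offset = -H^{-1} g.
    off_x = -(dyy * dx - dxy * dy) / det
    off_y = -(dxx * dy - dxy * dx) / det
    return (px + off_x) * stride, (py + off_y) * stride


# Synthetic 8x8 heatmap with a Gaussian peak at (3.3, 4.6) in heatmap cells.
true_x, true_y, sigma = 3.3, 4.6, 1.5
heatmap = [
    [math.exp(-((x - true_x) ** 2 + (y - true_y) ** 2) / (2 * sigma ** 2))
     for x in range(8)]
    for y in range(8)
]
x, y = refine_peak(heatmap, stride=4.0)  # close to (13.2, 18.4) in input pixels
```

In this sketch the network only has to locate the peak on the coarse grid; the extra precision comes from a handful of arithmetic operations per skeletal point rather than from a higher-resolution output.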

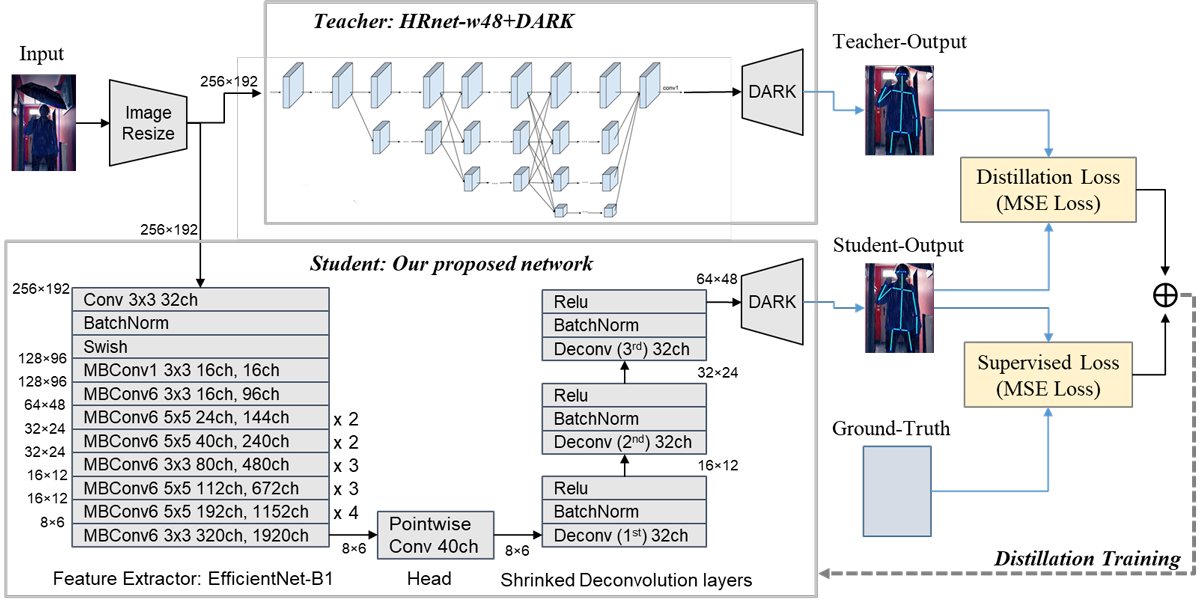

Furthermore, we devised a learning method called Distillation Learning. A higher-accuracy conventional method that we selected serves as the teacher, and our proposed method is trained as the student. By making it easier for the student to learn the correct answer from the teacher, just as in the relationship between a teacher and a student, we have achieved high inference accuracy with a small, lightweight, and simple neural network.
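The teacher-student idea can be illustrated with a generic distillation loss. The article does not state the actual loss function, so the following is only a sketch of one common formulation: the student is penalized both for deviating from the ground truth and for deviating from the teacher's output. The names `distillation_loss` and `alpha`, and all numbers, are hypothetical.

```python
def mse(pred, ref):
    """Mean squared error between two equal-length lists of scores."""
    return sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(pred)


def distillation_loss(student, teacher, target, alpha=0.5):
    """Blend a ground-truth term with a teacher-imitation term.

    `alpha` is an assumed weighting: 1.0 ignores the teacher,
    0.0 trains the student on the teacher's output only.
    """
    return alpha * mse(student, target) + (1.0 - alpha) * mse(student, teacher)


# Flattened heatmap scores for one skeletal point (made-up numbers).
target = [0.0, 1.0, 0.0]   # ground-truth annotation
teacher = [0.1, 0.8, 0.1]  # output of the larger, more accurate model
student = [0.2, 0.6, 0.2]  # output of the small model being trained
loss = distillation_loss(student, teacher, target, alpha=0.5)
```

The teacher's output is softer than the hard annotation, which is one reason often given for why a small student can find it easier to learn from.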

– Features –

The roles within the neural network are divided into three main categories.

- (1) The front part, which extracts features

- (2) The middle part, which accumulates the output of (1)

- (3) The back part, which calculates the final result using (2) as input

The same configuration is used in object detection and skeleton detection in general.

The conventional lightweight approach is to use a highly efficient network such as MobileNet, EfficientNet, or HRNet for (1). However, (2) also involves a large amount of computation, and simply reducing the weight of (2) directly leads to a significant decrease in accuracy.

In the presented technology, we significantly reduced the number of operations in (2) and (3) while suppressing the decrease in accuracy, by adding the coordinate interpolation post-processing together with an expansion of the receptive field of the neural network. We have verified this approach and established it as a novel method.
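To see why the resolution at which (2) and (3) operate has such a large effect on cost, the number of multiply-accumulate operations of a standard convolution can be estimated as below. The formula is the usual one for a dense 2D convolution; the layer sizes are hypothetical and are not taken from the paper.

```python
def conv_macs(out_h, out_w, in_ch, out_ch, kernel):
    """Multiply-accumulate operations of one standard 2D convolution."""
    return out_h * out_w * in_ch * out_ch * kernel * kernel


# Hypothetical 3x3 layer with 64 input and 64 output channels,
# evaluated for a 256x192 input image.
fine = conv_macs(64, 48, 64, 64, 3)    # output map at 1/4 of the input size
coarse = conv_macs(16, 12, 64, 64, 3)  # output map at 1/16 of the input size
ratio = fine / coarse                  # 16.0
```

Under these assumptions, running the same layer on a map that is four times smaller in each dimension costs one sixteenth of the operations, which is the kind of saving that a coarse output combined with coordinate interpolation is meant to exploit.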

– Achievement –

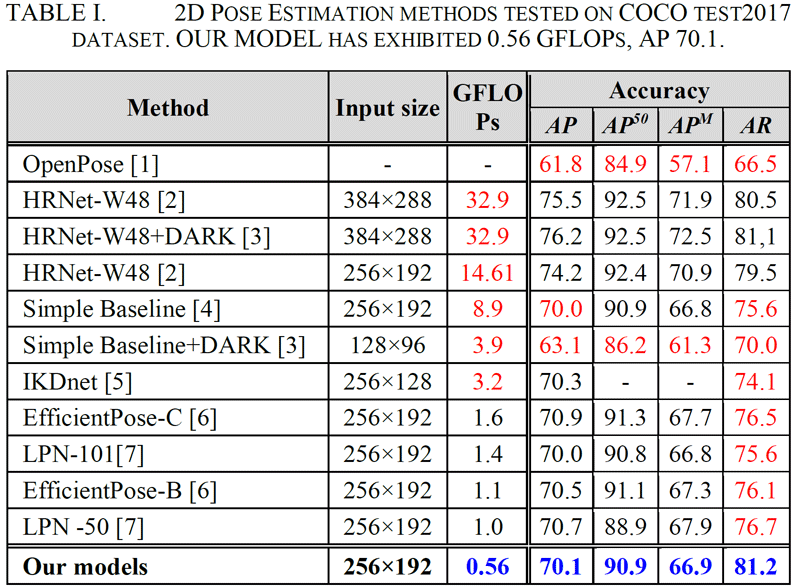

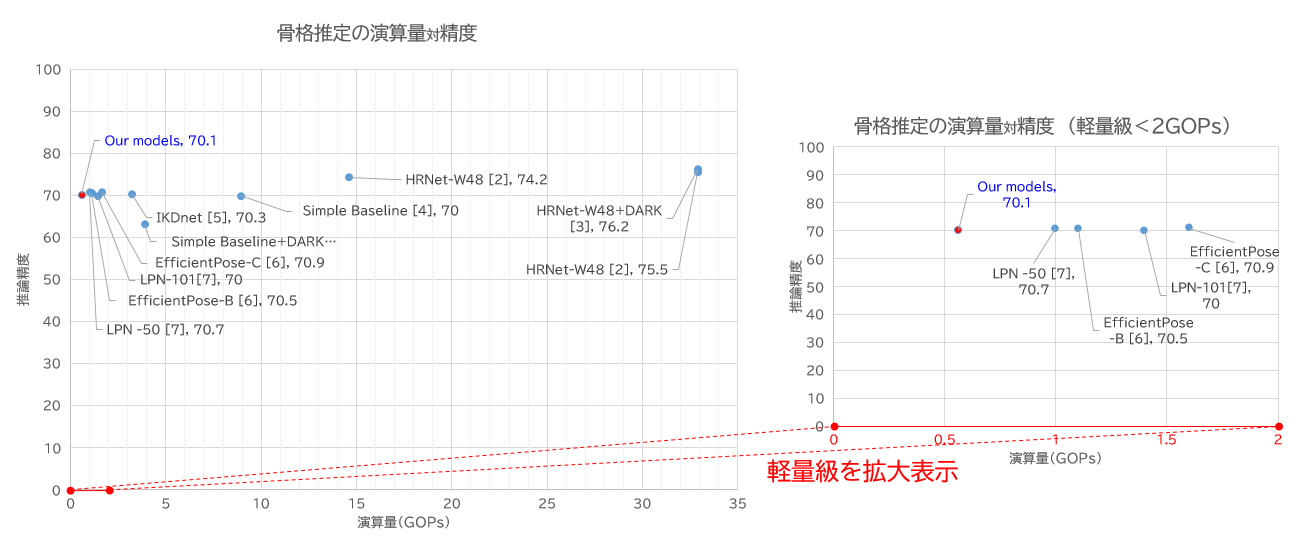

We applied the presented method to the Microsoft COCO person keypoints dataset (COCO) and recorded scores of AP 70.1 pt and AR 81.2 pt on the COCO validation dataset, and AP 70.5 pt and AR 77.5 pt on the COCO test dataset. The presented method achieved the highest inference accuracy among compact, lightweight methods with less than 1 Mega GOPs. In addition, the configuration does not depend on any specific hardware vendor, so it can be implemented on commercially available low-power devices such as NVIDIA Jetson AGX and Xilinx FPGA.
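For readers unfamiliar with these scores: AP and AR for COCO keypoints are computed from the object keypoint similarity (OKS) between predicted and annotated skeletal points, averaged over a range of OKS thresholds. The sketch below shows the OKS formula as we understand it from the COCO evaluation definition; the function name `oks`, the argument layout, and all numbers are illustrative assumptions, and the official COCO per-keypoint constants are not reproduced here.

```python
import math


def oks(pred, truth, visible, area, kappas):
    """Object keypoint similarity for one person.

    pred, truth: lists of (x, y) coordinates per skeletal point.
    visible:     list of booleans, True where the point is annotated.
    area:        object area in pixels (the squared scale s^2).
    kappas:      per-keypoint constants k_i (supplied by the caller).
    """
    total, count = 0.0, 0
    for (px, py), (tx, ty), v, k in zip(pred, truth, visible, kappas):
        if not v:
            continue  # unannotated points do not contribute
        d2 = (px - tx) ** 2 + (py - ty) ** 2
        total += math.exp(-d2 / (2.0 * area * k * k))
        count += 1
    return total / count if count else 0.0


# Made-up example: two annotated points, one predicted 2 pixels off.
truth = [(10.0, 10.0), (20.0, 15.0), (0.0, 0.0)]
pred = [(10.0, 10.0), (22.0, 15.0), (5.0, 5.0)]
visible = [True, True, False]
score = oks(pred, truth, visible, area=400.0, kappas=[0.1, 0.1, 0.1])
```

A prediction counts as correct at a given threshold when its OKS exceeds that threshold, so small coordinate errors, such as those removed by sub-pixel interpolation, directly affect AP and AR.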

- Presentation dates: December 15-18, 2021

- Conference: FG2021 (IEEE International Conference on Automatic Face and Gesture Recognition 2021)

- Title: Rethinking deconvolution for 2D Human Pose Estimation Light yet accurate model for Real-time Edge computing

Thank you.

■Introducing our R&D group

Data Analysis Group, InfoTech, Connected Advanced Development Div.

Our group develops practical systems and services based on machine learning, using image data from cameras mounted inside and outside the vehicle and vehicle behavior (CAN) data. For example, our road obstacle detection technology based on image data from the embedded cameras ranked 1st in the world in a quantitative evaluation using public datasets, and was accepted to ACCV2020, one of the most important conferences in computer vision. Also, our technology for detecting and tracking people and objects in videos pixel by pixel won 1st place in the world in a competition for object recognition technology, and was accepted to CVPR2021, one of the most important conferences in computer vision.

In addition, our group is working in parallel on deployment to edge devices (e.g. Xavier, Google Edge TPU) using network compression technologies (quantization, pruning, distillation), and on distributed and parallel processing using middleware such as Kubernetes (for operating the technology in the cloud), in order to release our planned new services and architectures.

In this way, we believe the strength of our group is that our research and development covers a wide range, from upstream design, which is our specialty, to operation, which we handle through collaboration, in order to realize a given system or service.

Research and development in machine-learning-based data analysis, especially in image processing, has already produced numerous public datasets. Competitions using these public datasets are held regularly, and the world's leading research institutions, universities, and other public research bodies, as well as the big tech companies known as "GAFAM," compete in them. However, the world's No. 1 AI-related technology in such a competition will not necessarily lead directly to good user experiences for our customers. While these AI-related technologies can perform very well under certain limited conditions, they generally have low generalization performance and are not practical to use. In other words, in many cases they depend strongly on the public dataset, and their computational cost is too high for real use cases. Therefore, researchers and developers have to extract the "essence" of these AI-related technologies and repeatedly apply "kaizen" (optimization) to fit our customers' use cases.

We believe that the moment when the knowledge and know-how gained through this unique and steady process is reflected in actual systems and services, and leads to good ("satisfactory, comfortable, and enjoyable") user experiences for our customers, is our moment of fulfillment (the real thrill) and our motivation to continue research and development.

■Cool Career Opportunities/Hiring

The business domain of data analysis using machine learning and image recognition is one of our focus areas. Along with improvements in ICT, cloud technologies, and in-vehicle cameras, our scope of application is expanding from vehicles to urban development and mobility services.

Our group is looking for new staff who can deepen their expertise in a particular technical area (AI-related technology) while also expanding (or trying to expand) their coverage and collaboration areas based on that technical area.

For example, one of the projects in which our group is involved is the advanced development of a crewless system (e.g. automatic door opening/closing, departure judgment) for e-Palette, an automated shuttle bus for MaaS (Mobility as a Service). The elemental technologies for realizing this system include human detection from images, posture estimation, and abnormal behavior detection. A number of promising methods have already been proposed in the research field of computer vision for these elemental technologies. However, simply combining these elemental technologies is not enough to make a system. A mechanism is also needed to dynamically allocate the functions of these elemental technologies, sometimes to the cloud side and sometimes to the edge side, depending on the situation of the vehicle (e.g. driving, passengers getting on and off, stopping).

With customer value in mind, our mission is to maximize our customers' user experience by building an "optimal architecture" that incorporates the essence of these technologies and the needs of our customers, while keeping abreast of the latest research on elemental technologies.